I deal with a lot of user uploaded images and videos at Thankbox. It's an online group card service and my users have the option of attaching GIFs or uploading their own images or videos to a message they leave their friends.

On busy days I could have around 300-500 media items uploaded in a single day.

When I started Thankbox I was just storing all of these files in Cloudinary, happily using their free tier. As the product started scaling, though, I realized that even on their paid tier I couldn't keep storing these files there indefinitely, since I would very quickly eat up my credits (the unit Cloudinary uses to measure usage).

My solution was to leverage S3 as a long-term cheaper storage solution. In this post I will outline how I did that.

Cloudinary-first

I use Cloudinary as my main mechanism for serving uploaded images and videos. It's a really awesome service. It has great SDKs for both Vue and PHP, which are what Thankbox is written in. I also heavily leverage its upload manipulations to keep uploaded file sizes small, downsizing them to the maximum resolution necessary before storing them.

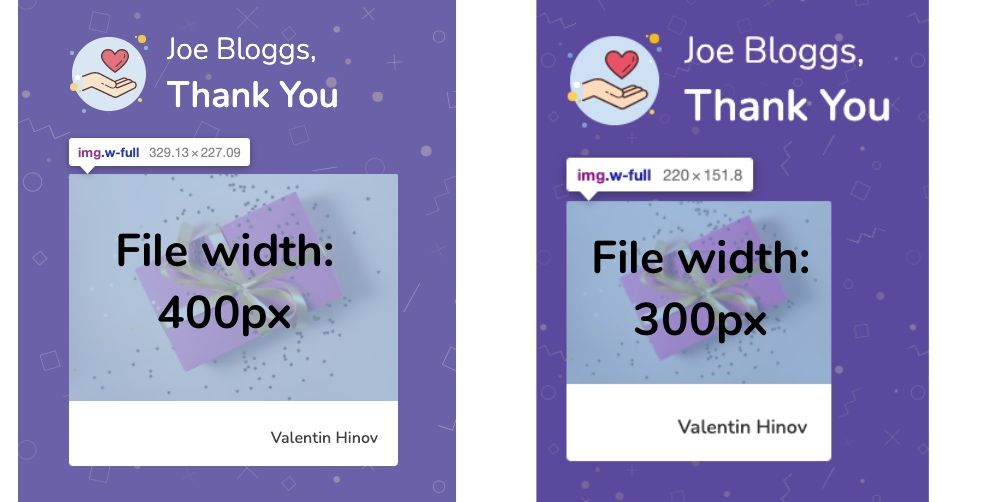

Finally, and most importantly, I love Cloudinary's dynamic transformations. One example of that is that I use them to only serve uploaded images at the appropriate size. When I show an image I pick the closest width multiple of 100 to download for it:

In the example above, I'd download a 400-width version of the image for the 329-wide div but a 300-width version for the 220 one. I round up, not down, to the closest 100-width multiple since I don't want users seeing a pixelated image.
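Thankbox does this rounding in its Vue front end; the same arithmetic is sketched below in PHP to match the other examples in this post. It rounds the rendered width up to the next multiple of 100 and builds a Cloudinary delivery URL with a `w_<width>` transformation. The cloud name `demo` and public ID `sample.jpg` are placeholders, not Thankbox values.

```php
<?php

/**
 * Round a rendered element width up to the next multiple of 100 px,
 * so the requested image is never narrower than the space it fills.
 */
function roundUpToHundred(int $renderedWidth): int
{
    // ceil() rounds up (329 -> 400, 220 -> 300); never request less than 100 px.
    return max(100, (int) ceil($renderedWidth / 100) * 100);
}

/**
 * Build a Cloudinary delivery URL that asks for the rounded-up width.
 * $cloudName and $publicId are placeholders for your own account and asset.
 */
function cloudinaryUrlForWidth(string $cloudName, string $publicId, int $renderedWidth): string
{
    return sprintf(
        'https://res.cloudinary.com/%s/image/upload/w_%d/%s',
        $cloudName,
        roundUpToHundred($renderedWidth),
        $publicId
    );
}

echo cloudinaryUrlForWidth('demo', 'sample.jpg', 329), PHP_EOL;
// prints https://res.cloudinary.com/demo/image/upload/w_400/sample.jpg
```

Because the widths snap to a small set of values (100, 200, 300, ...), many different element sizes map to the same transformed URL, which is what lets Cloudinary's cache of transformed assets do most of the work.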

This both saves my users bandwidth and helps assets load faster. Cloudinary also caches dynamically transformed assets, which means that any future request to load the same image at the 400- or 300-width size receives a cached version. Awesome, right?

Note: I do not apply the same approach to videos. Since users can choose to view those full screen I want them available at the maximum resolution I support. It's a good trade-off as users don't upload videos that much.

Cloudinary costs can creep up

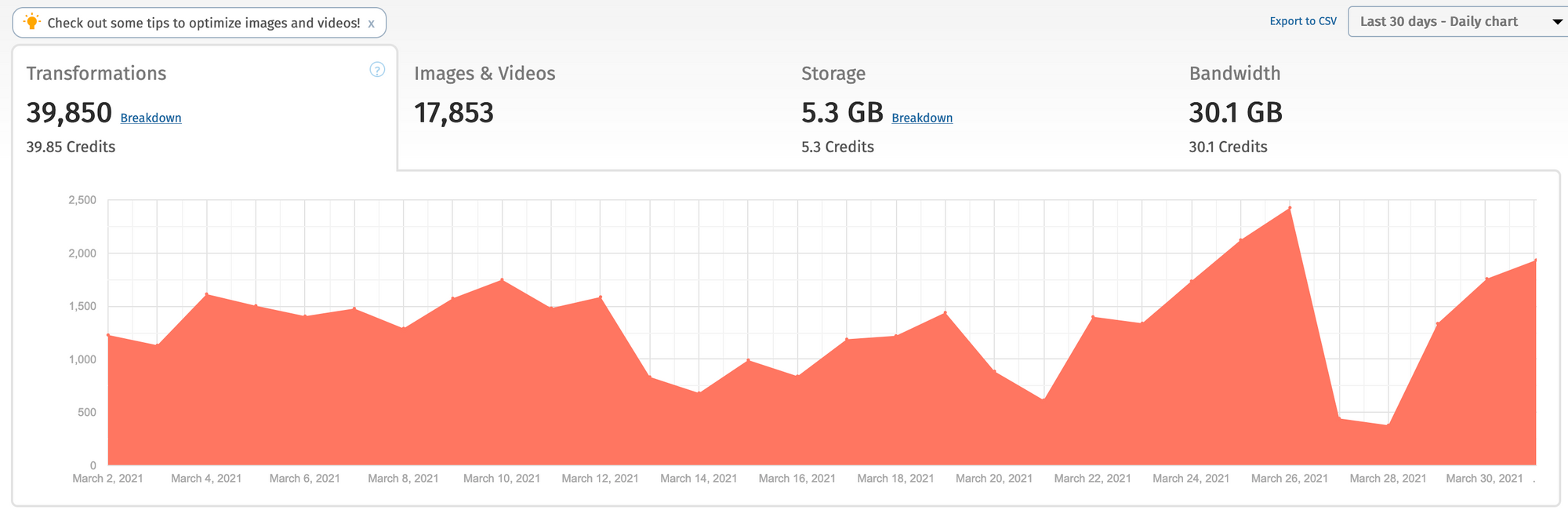

Cloudinary billing works with what they call "credits". They track your rolling usage over a 30-day period and determine how many credits you've used based on:

- The total number of transformations you've made

- The net viewing bandwidth they've delivered

- The total amount of managed storage they hold for you

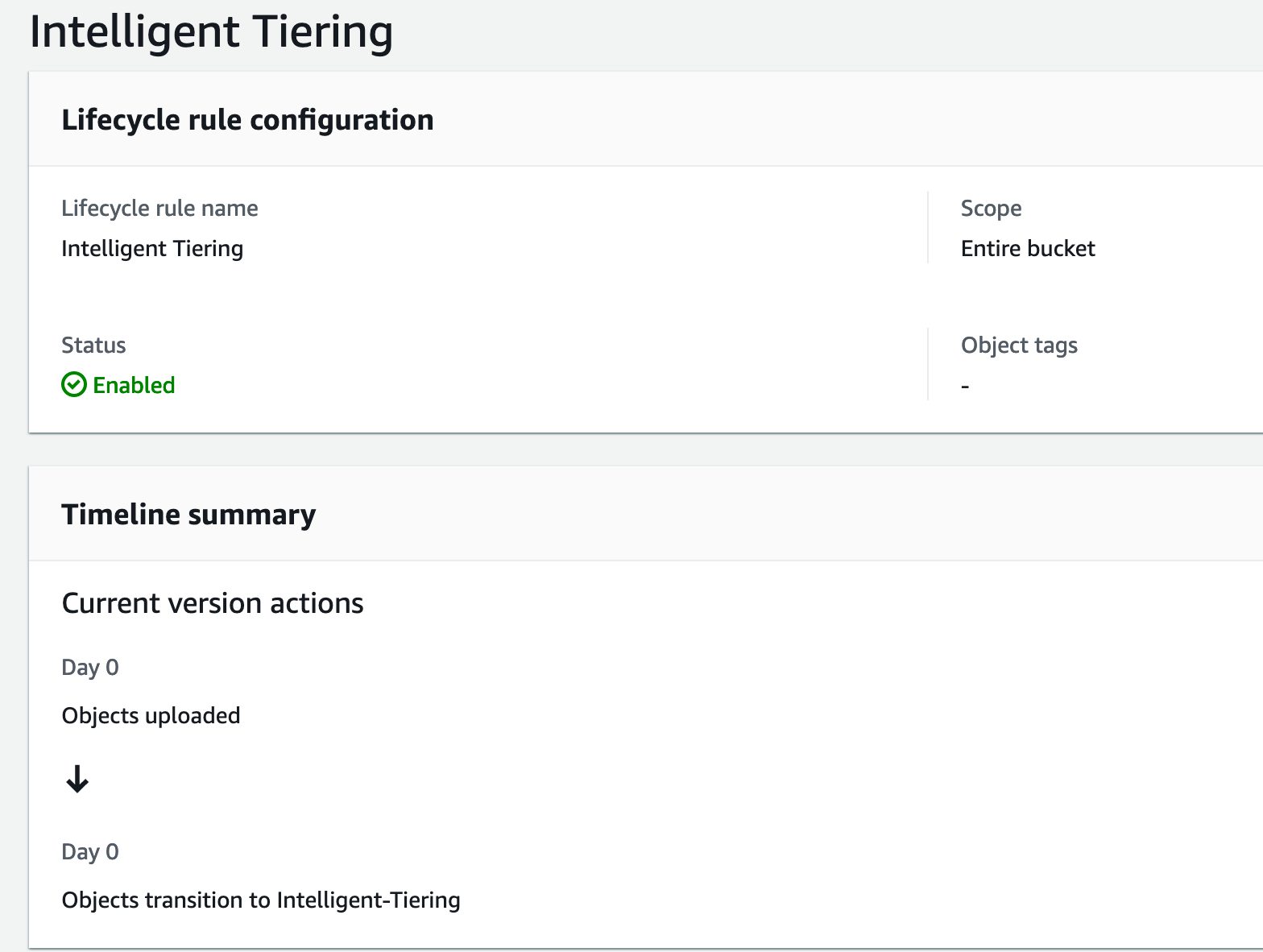

Here is an example of a 30-day usage pattern:

As you can see, Thankbox does a lot of transformations and uses quite a bit of bandwidth. At the root of both, though, is storage. After all, those transformations and that bandwidth result from the uploaded media you store: the more assets you have with Cloudinary, the more you have to transform and the more you have to serve.
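To make the credit arithmetic concrete, here is a rough estimator that adds up the three usage categories listed above. It assumes the conversion Cloudinary has published for its credit-based plans (1 credit = 1,000 transformations = 1 GB of managed storage = 1 GB of net viewing bandwidth); those rates are exposed as parameters because they are an assumption, not something this post states, so check Cloudinary's current pricing before relying on them.

```php
<?php

/**
 * Rough Cloudinary credit estimate for a rolling 30-day window.
 *
 * ASSUMPTION: the default rates (1,000 transformations, 1 GB storage or
 * 1 GB bandwidth per credit) mirror Cloudinary's published conversion at
 * the time of writing; override them if your plan differs.
 */
function estimateCredits(
    int $transformations,
    float $storageGb,
    float $bandwidthGb,
    float $transformationsPerCredit = 1000.0,
    float $storageGbPerCredit = 1.0,
    float $bandwidthGbPerCredit = 1.0
): float {
    return $transformations / $transformationsPerCredit
        + $storageGb / $storageGbPerCredit
        + $bandwidthGb / $bandwidthGbPerCredit;
}

// estimateCredits(5000, 10.0, 3.0) returns 18.0 under the default rates.
```

Storage is only one of the three terms, but since every stored asset can also generate transformations and bandwidth, trimming storage tends to pull all three down together.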

When I noticed my credits creeping up I decided that I needed a way to control my storage. I couldn't just keep storing assets in Cloudinary forever - I'm not enterprise-level.

I don't need to store everything in Cloudinary forever

That is when I came to a realization - I don't need all of my uploaded files to be in Cloudinary - just the recent, most active ones.

This is where the usage patterns of Thankbox helped me out. A Thankbox's active lifespan is usually less than 30 days - from being created, to people filling it with messages and pictures, to it being sent and seen by the recipient. After that point the chances that someone will open it again fall drastically. It's essentially in long-term storage.

"Aha!" - I thought - "Long-term storage is exactly what I need!"

Enter AWS S3 - my cheap long-term storage solution

I already had S3 in place as it was being used for various other Thankbox functionality. I was already hosting images on it, as well. Whenever a Thankbox is sent I generate a high-resolution PNG of it for the recipient to download. That is stored and served from S3 for several days before being automatically deleted when it's no longer needed.

S3 is great, cheap and integrates easily with Laravel, my web framework. It's even cheaper if you leverage its Infrequent Access (IA) storage class, which can cut your storage bill dramatically: instead of paying $0.023 per GB per month you pay $0.0125. There would be downsides to using S3, of course. First off, objects in IA would take a bit longer to load when viewed, though in practice that's hardly noticeable. Secondly, I'd lose the benefit of Cloudinary's dynamic image transformations and would have to serve images at their max size.

That was fine with me since, as I mentioned, the photos and videos I'd be putting there have a pretty low chance of being accessed. I was OK with the loading experience being a little slower for the few users who would end up opening them.
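The saving is easy to put in numbers. This sketch uses the two per-GB-month prices quoted above; real S3 prices vary by region and volume tier, and the 100 GB archive size is a hypothetical figure, not Thankbox's.

```php
<?php

// Per-GB-month prices quoted in this post (S3 Standard vs. Infrequent Access).
const S3_STANDARD_PER_GB = 0.023;
const S3_IA_PER_GB = 0.0125;

function monthlyStorageCost(float $gigabytes, float $pricePerGb): float
{
    return $gigabytes * $pricePerGb;
}

$gb = 100.0; // hypothetical archive size
printf(
    'Standard: $%.2f/month, IA: $%.2f/month' . PHP_EOL,
    monthlyStorageCost($gb, S3_STANDARD_PER_GB),
    monthlyStorageCost($gb, S3_IA_PER_GB)
);
// prints Standard: $2.30/month, IA: $1.25/month
```

Whatever the volume, IA comes out roughly 46% cheaper per GB ((0.023 - 0.0125) / 0.023).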

The solution

So now I had my solution, and it looked pretty straightforward to implement. I decided that whenever a Cloudinary-hosted image asset is over a month old I'd move it to S3. That way, during its active lifetime it benefits from being served by Cloudinary, and after that I can retire it to S3 for cheap long-term storage.

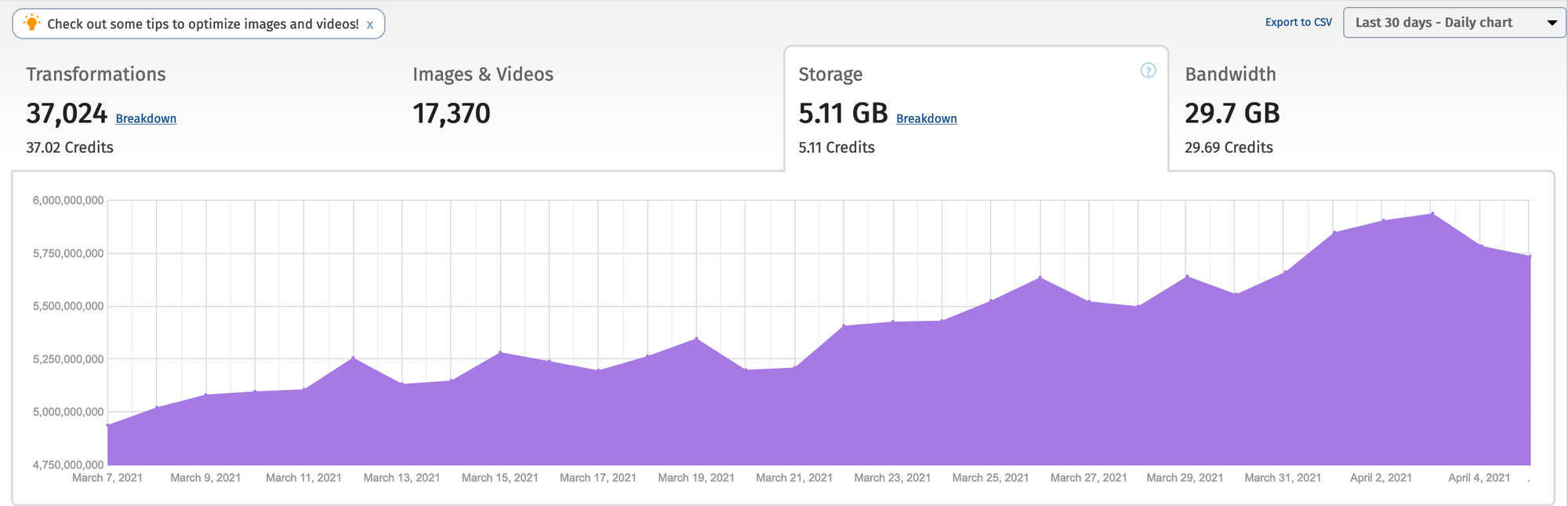

I created an S3 bucket and put it behind a CloudFront distribution so its files are served through a CDN. I then applied a Lifecycle rule to it to move all uploaded files to Intelligent-Tiering as soon as possible.

Note that this doesn't move files to the Infrequent Access tier immediately. Instead, S3 starts monitoring the uploaded files and, once it notices that a file hasn't been accessed for 30 days, moves it to IA. If the file is accessed after that, S3 moves it back to the regular Frequent Access tier.
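The post doesn't say how the Lifecycle rule was created (the S3 console works fine). If you prefer to manage it from code, one way is the AWS SDK for PHP's `putBucketLifecycleConfiguration` call. This sketch only builds the request arguments: a single rule that transitions every object to `INTELLIGENT_TIERING` after 0 days. The bucket name and rule ID are hypothetical, and you should confirm the transition constraints in the S3 Lifecycle documentation for your setup.

```php
<?php

/**
 * Arguments for S3's PutBucketLifecycleConfiguration with one rule that
 * moves every object into Intelligent-Tiering as soon as lifecycle
 * processing picks it up. Bucket name and rule ID are placeholders.
 */
function intelligentTieringLifecycleArgs(string $bucket): array
{
    return [
        'Bucket' => $bucket,
        'LifecycleConfiguration' => [
            'Rules' => [
                [
                    'ID' => 'all-objects-to-intelligent-tiering',
                    'Status' => 'Enabled',
                    'Filter' => ['Prefix' => ''], // empty prefix: the whole bucket
                    'Transitions' => [
                        // Days => 0: eligible on the next lifecycle run (runs are asynchronous)
                        ['Days' => 0, 'StorageClass' => 'INTELLIGENT_TIERING'],
                    ],
                ],
            ],
        ],
    ];
}

// With the AWS SDK for PHP installed (composer require aws/aws-sdk-php):
// $s3 = new Aws\S3\S3Client(['region' => 'us-east-1', 'version' => 'latest']);
// $s3->putBucketLifecycleConfiguration(intelligentTieringLifecycleArgs('my-media-archive'));
```

Once objects are in Intelligent-Tiering, S3 handles the Frequent/Infrequent Access moves described above on its own; the rule only gets them into that storage class.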

Technically, I implemented this as a task scheduled to run every night on my server that does the following:

- Get assets hosted on Cloudinary which are older than a month. For each asset:

  - Download it from Cloudinary at the source (max) resolution.

  - Upload it to S3 and re-point its database entry to the new CloudFront link.

  - Delete it from Cloudinary.
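The per-asset steps above can be sketched in a framework-agnostic way. This is not the author's `moveMediaToS3` method, which the post doesn't show: `moveAsset` and its four callables are hypothetical stand-ins for the real Cloudinary, S3 and database code. The point it illustrates is ordering: the Cloudinary copy is deleted last, after the upload succeeded and the database points at the new URL.

```php
<?php

/**
 * Hypothetical sketch of moving one asset from Cloudinary to S3.
 * Each callable stands in for real SDK / database code.
 *
 * If any step throws, the remaining steps are skipped, so a failed
 * upload never leads to the Cloudinary original being deleted.
 */
function moveAsset(
    string $sourceId,
    callable $downloadOriginal,     // fn(string $sourceId): string  (raw bytes)
    callable $uploadToS3,           // fn(string $sourceId, string $bytes): string  (CDN URL)
    callable $repointDatabase,      // fn(string $sourceId, string $url): void
    callable $deleteFromCloudinary  // fn(string $sourceId): void
): string {
    $bytes = $downloadOriginal($sourceId);   // 1. fetch at source (max) resolution
    $url = $uploadToS3($sourceId, $bytes);   // 2. store in the S3 bucket
    $repointDatabase($sourceId, $url);       // 3. point the media record at the CDN URL
    $deleteFromCloudinary($sourceId);        // 4. only now free up Cloudinary storage

    return $url;
}
```

With real implementations plugged in, a run that dies mid-way leaves the asset either still on Cloudinary or already reachable via CloudFront, and the next night's run can pick up whatever is still on Cloudinary.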

For the coders, here is the Laravel PHP code that does this:

```php
// Number of media items to move per nightly run (defaults to 1,500)
$num_media = (int) ($this->option('num-media') ?? 1500);

Media::whereSourceProvider('cloudinary')
    // Only assets uploaded more than a month ago
    ->where('created_at', '<', now()->subMonth())
    // Oldest first
    ->orderBy('created_at')
    ->select(['id', 'source_id', 'type'])
    ->take($num_media) // Cloudinary has an API limit of 2,000/hour, so let's put a sensible limit here
    ->get()
    ->each(fn (Media $media) => $this->moveMediaToS3($media));
```

A lovely solution in fewer than 10 lines of code, just the way I like it! Obviously, the actual `moveMediaToS3` method is more involved, but it's still fairly concise thanks to Laravel's great S3 integration and the Cloudinary PHP SDK. If you want to know more about my tech stack, I wrote about it here.

Best of both worlds - low ongoing Cloudinary storage and cheap S3 long-term storage

I've been using this approach for a few months now and the results are great! I am staying well below my Cloudinary plan limits and, even though my product continues to scale, the storage demands don't go up that quickly.

You can see the dips in storage every so often. This is mostly because Thankbox has quiet periods on the weekend, which means I am moving more assets off Cloudinary on those days than I'm putting in.

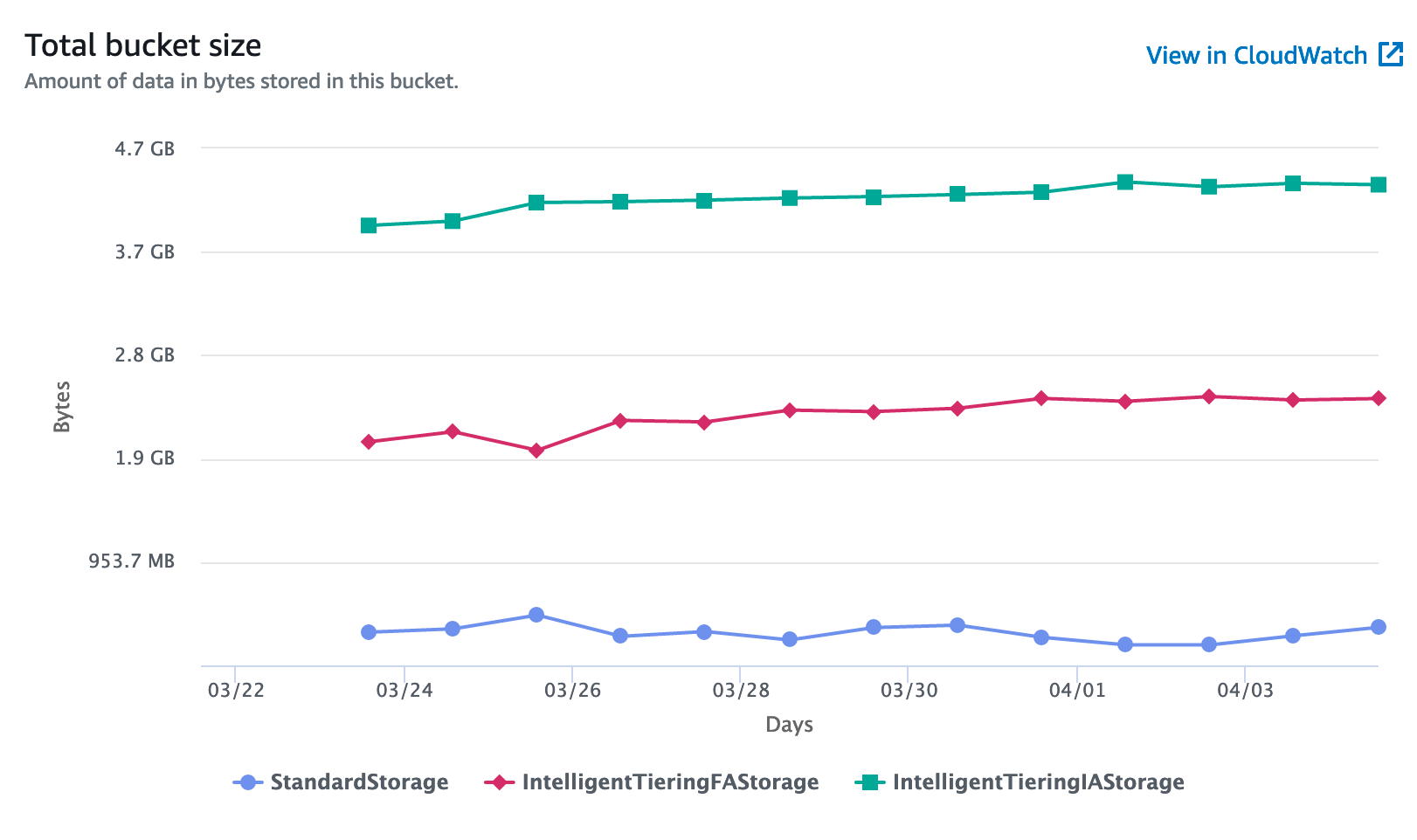

It's a good story for my S3 graph as well: most objects are on Intelligent-Tiering (green line).

The red and blue lines represent objects on the standard S3 tier, most of which will eventually transition to IA. Even if they don't, standard S3 pricing is still far cheaper than keeping them on Cloudinary.

I think this simple, elegant solution will carry me for a good while, until I hit a scale where it needs to be revisited.